Modes and Mechanisms of Game-like Interventions in Intelligent Tutoring Systems

I completed my PhD dissertation in Educational Technology in the Computer Science Department at Worcester Polytechnic Institute (WPI). My research was at the intersection of Educational Psychology, Game Design, Data Mining, and Artificial Intelligence.

Educational games and Intelligent Tutoring Systems (ITS) are two emerging developments within education technology. Games provide rich learning experiences, and ITS deliver robust learning gains. I explored different ways to combine these two fields to create learning systems that are engaging as well as effective. I created three game-like systems with cognition, metacognition, and affect as their primary targets and modes of intervention. Monkey’s Revenge is a game-like math tutor that offers cognitive tutoring in a game-like environment. The Learning Dashboard is a game-like metacognitive support tool for students using Mathspring, an ITS. Mosaic comprises a series of mini math games that pop up within Mathspring to enhance students' affect.

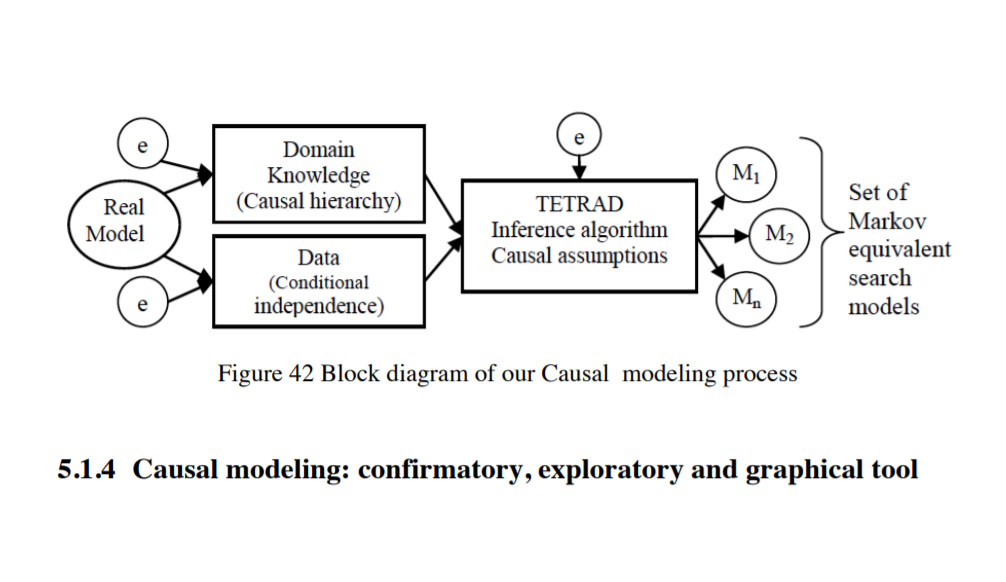

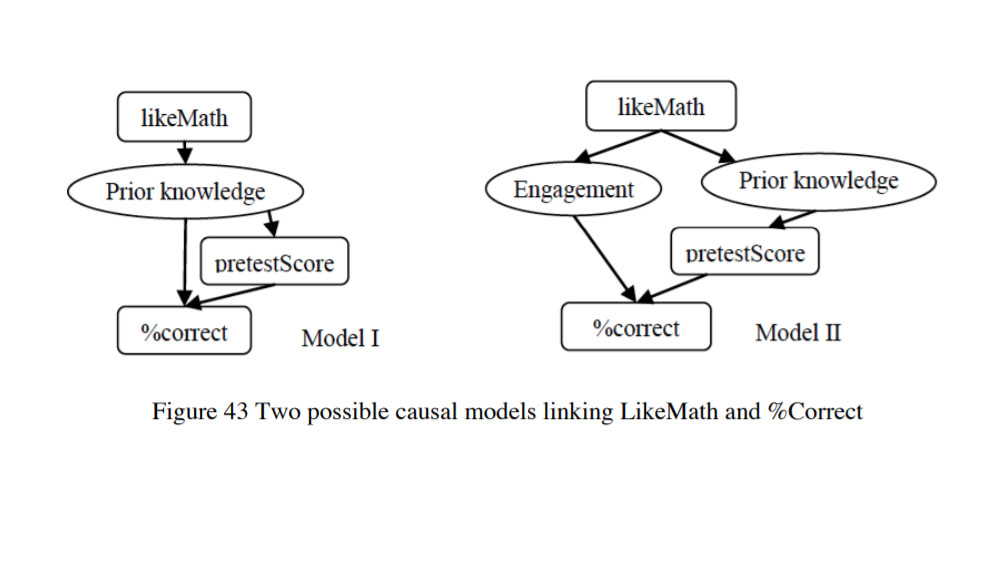

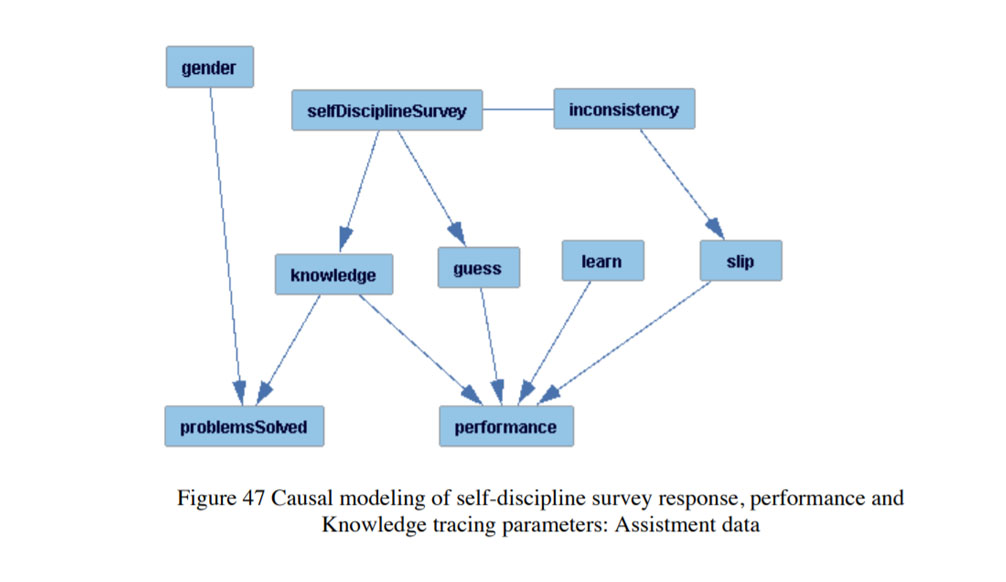

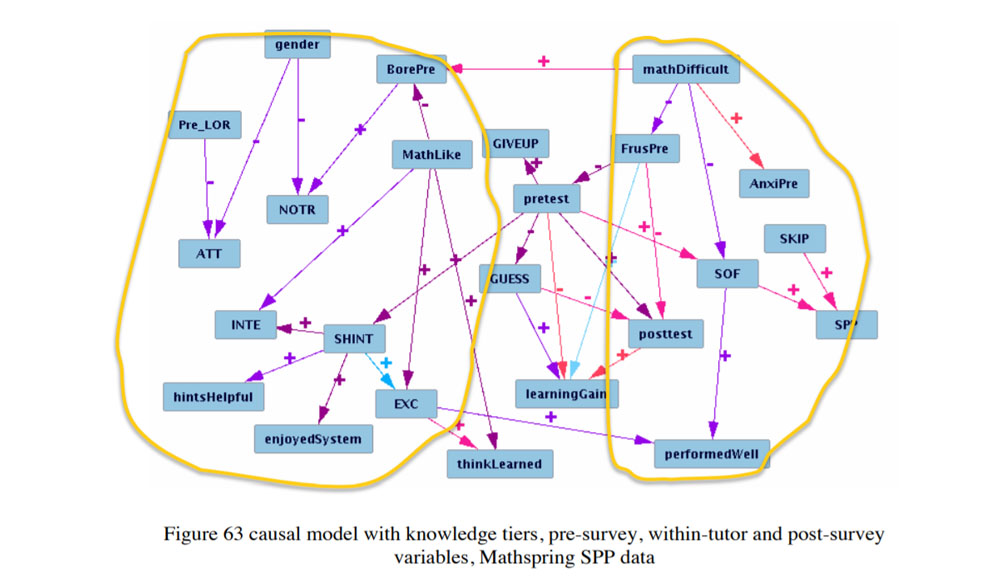

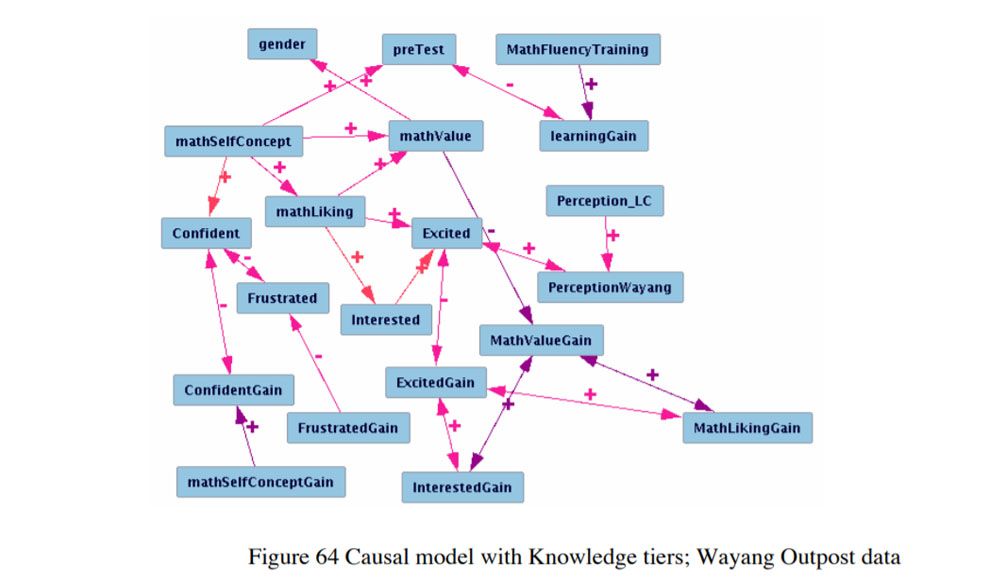

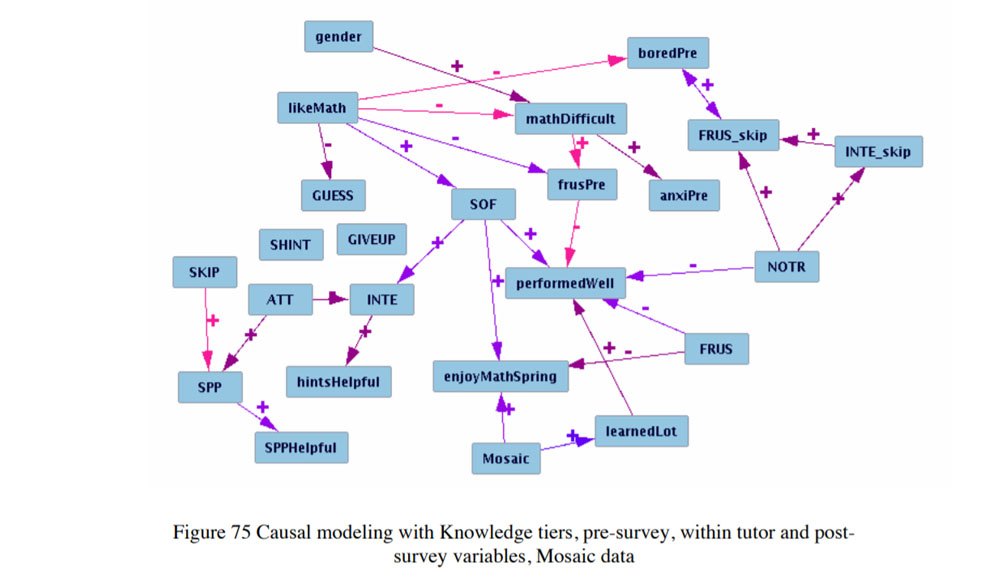

I ran multiple randomized controlled studies to evaluate each of these three interventions. In addition, I used Causal Modeling to further explore the interrelationships between students’ incoming characteristics and predispositions, their mechanisms of interaction with the tutor, and the ultimate learning outcomes and perceptions of the learning experience.

I was very fortunate to work with Joseph E. Beck and Ivon Arroyo as my co-advisors. The first three years of my research were funded by the Fulbright Science and Technology PhD scholarship and the remainder by a Research Assistantship from The Center for Knowledge Communication, UMass Amherst.

Link to Dissertation | Link to Slides

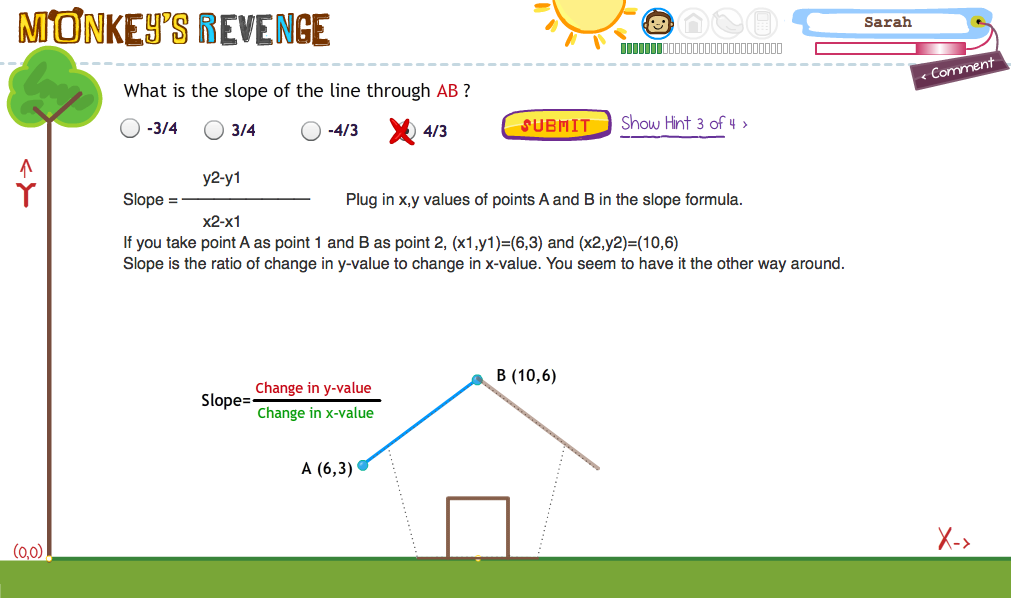

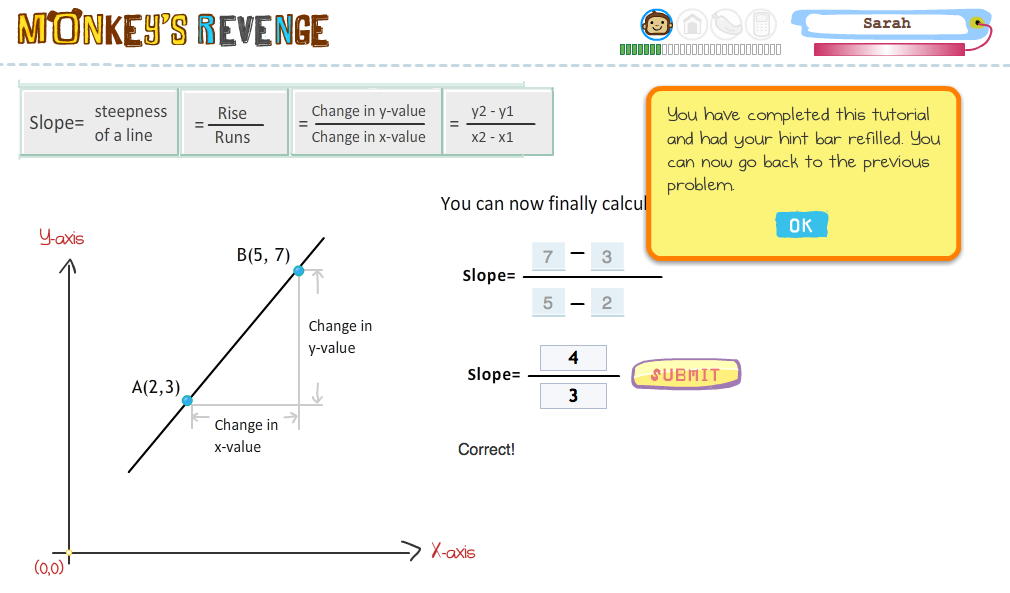

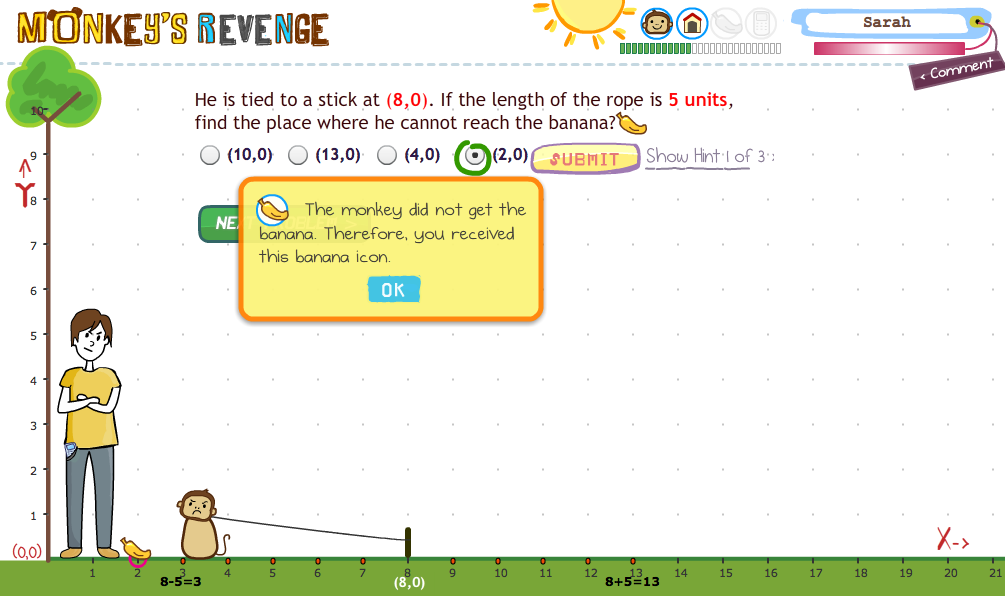

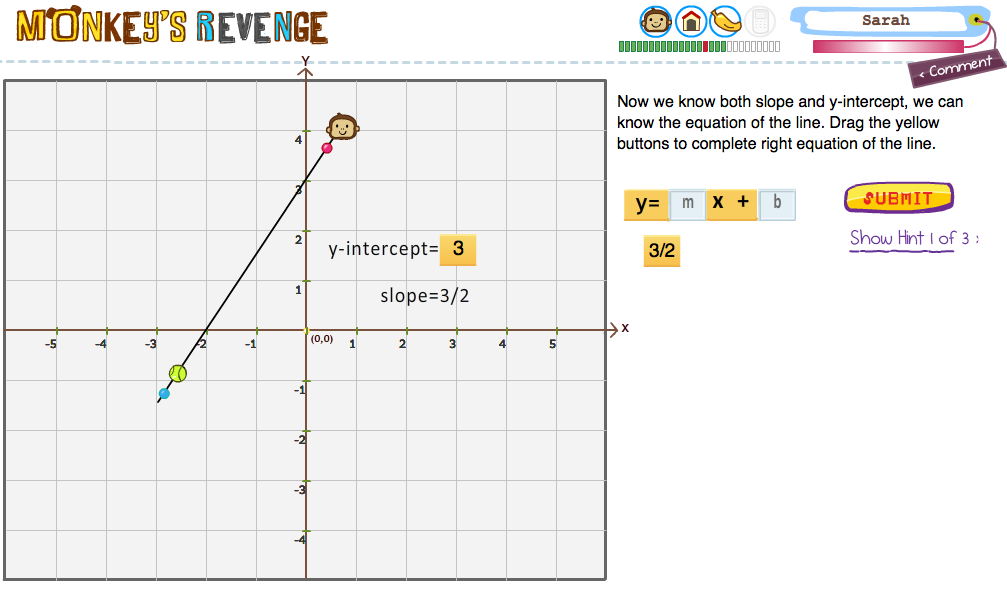

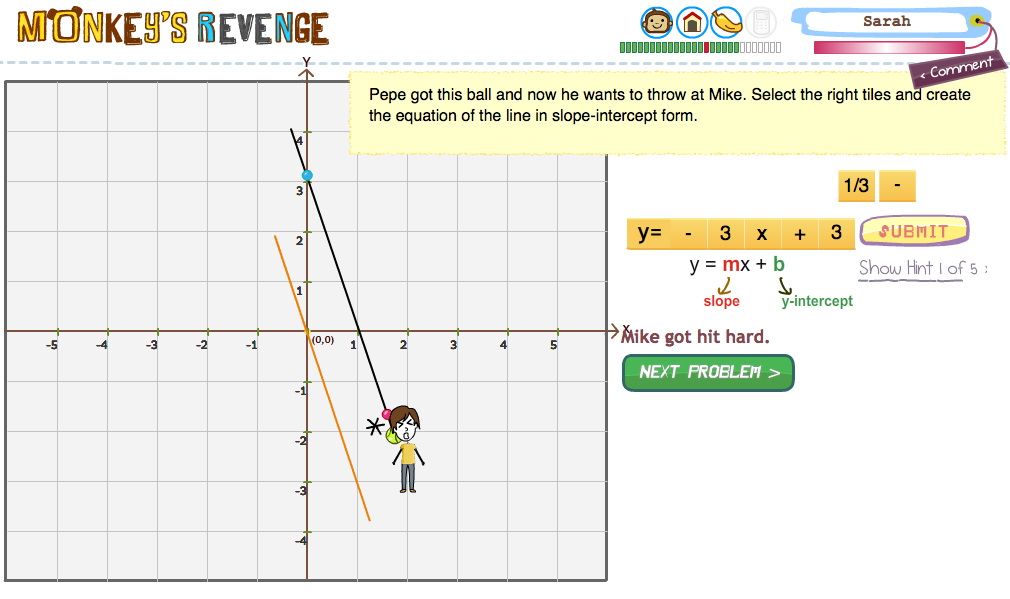

Monkey's Revenge

Monkey’s Revenge is a math-learning environment with game-like elements. It consists of a series of 8th grade coordinate geometry problems wrapped in a visual narrative. Students have to help story characters solve the problems in order to move the story forward. As in classic computer tutors, they get hints, bug messages, and tutorial sessions when they stumble on problems and misconceptions.

Game elements can add fun and enhance learning, but they can also overload students' working memory. I am taking a minimalist approach to find an optimal balance, so that the game-like tutor is engaging enough but not overwhelming or distracting.

Learning Dashboard

The Learning Dashboard is a game-like metacognitive support tool for students using Mathspring, a math intelligent tutoring system. The Learning Dashboard gives feedback on students’ performance, encouraging them to reflect on their learning process and make appropriate choices. There are three distinct pages that display information at different levels of granularity:

1. A Personalized Math Tree (Domain level feedback on the student’s overall performance in Mathspring)

2. A Student Progress Page (Topic level feedback on each math topic, e.g., “fractions”)

3. Topic Details (Problem level feedback on each problem within a topic)

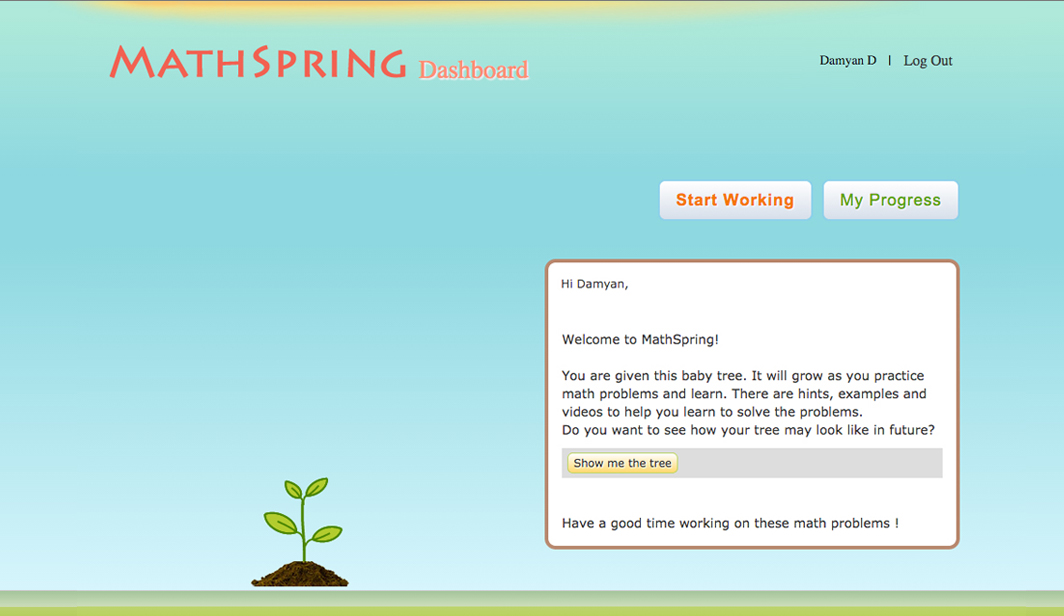

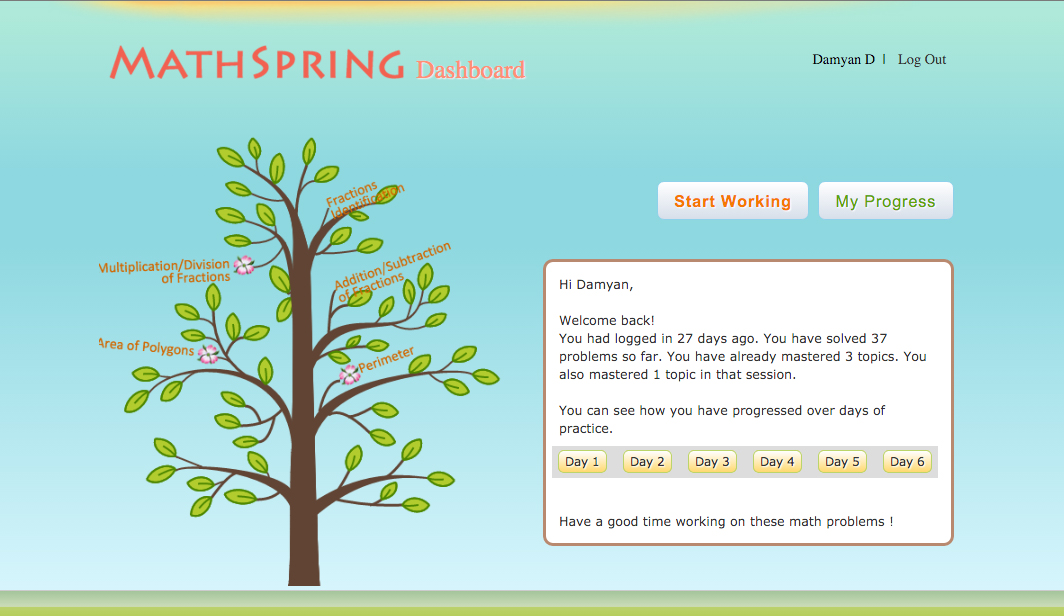

The Math Tree: A student’s overall performance in Mathspring is represented by a math tree. When a student logs in to Mathspring for the first time, they are given a baby tree. As the student works on math problems in Mathspring, the baby tree grows. The tree generates new branches as the student works on new math topics, and it blossoms for the topics that the student masters. Students can observe how the tree grew over the different days they worked in the tutor by clicking on the button for each day that they used the system.
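The growth rules described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the actual Mathspring code: the `MathTree` class, its `work_on` and `view_day` methods, and the day labels are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class MathTree:
    """Hypothetical model of the math tree (not the real Mathspring code)."""
    branches: set = field(default_factory=set)   # one branch per topic worked on
    blossoms: set = field(default_factory=set)   # topics the student has mastered
    history: dict = field(default_factory=dict)  # day label -> snapshot of the tree

    def work_on(self, day, topic, mastered=False):
        # Working on a new topic grows a new branch.
        self.branches.add(topic)
        # A mastered topic makes its branch blossom.
        if mastered:
            self.blossoms.add(topic)
        # Save a snapshot so the student can revisit the tree for this day.
        self.history[day] = (frozenset(self.branches), frozenset(self.blossoms))

    def view_day(self, day):
        # Corresponds to clicking the button for a given day.
        return self.history[day]


tree = MathTree()
tree.work_on("day 1", "fractions")
tree.work_on("day 2", "area and perimeter", mastered=True)
branches, blossoms = tree.view_day("day 1")
```

Storing an immutable snapshot per day is one simple way to support the day-by-day view; the real system may well reconstruct the tree from logs instead.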

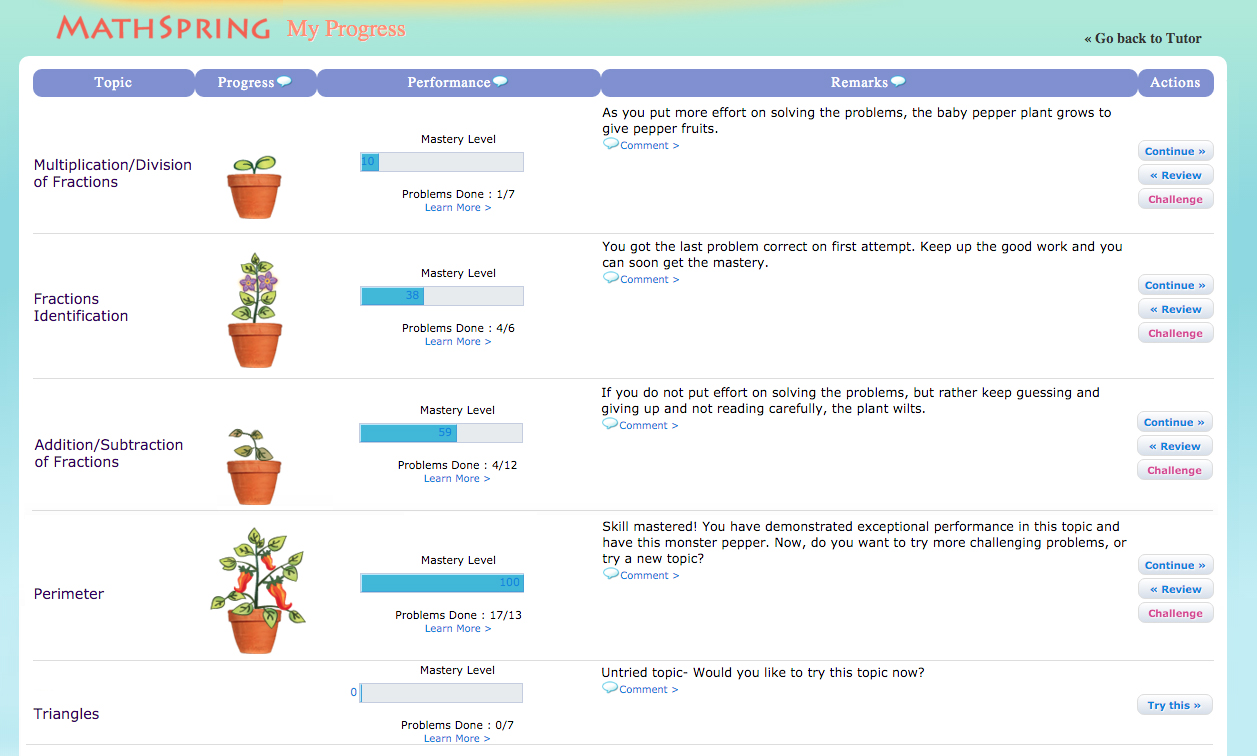

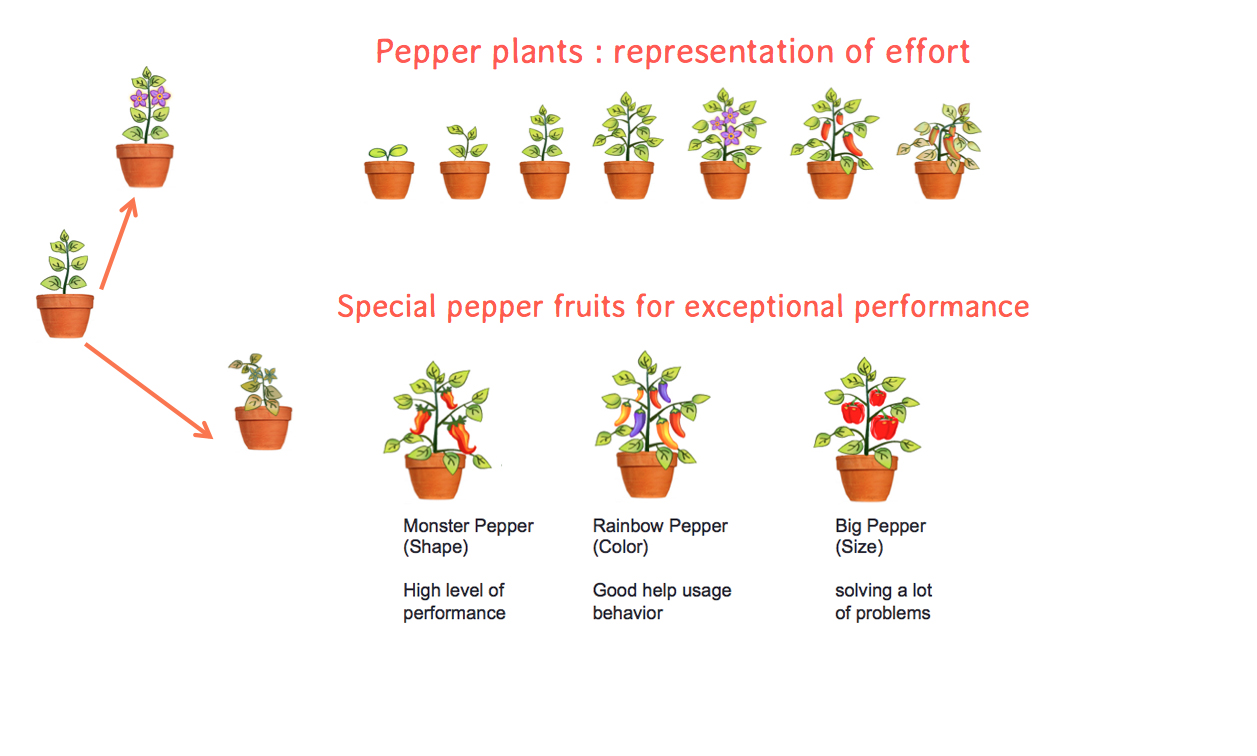

The Student Progress Page: The Student Progress Page (SPP) within Mathspring helps students observe their performance and the tutor’s assessment and feedback. It provides an intuitive and appealing representation of effort using a potted plant. The plant grows as students put more effort into solving problems and bears fruit when a topic is mastered. The plant withers when there is a lack of student effort.

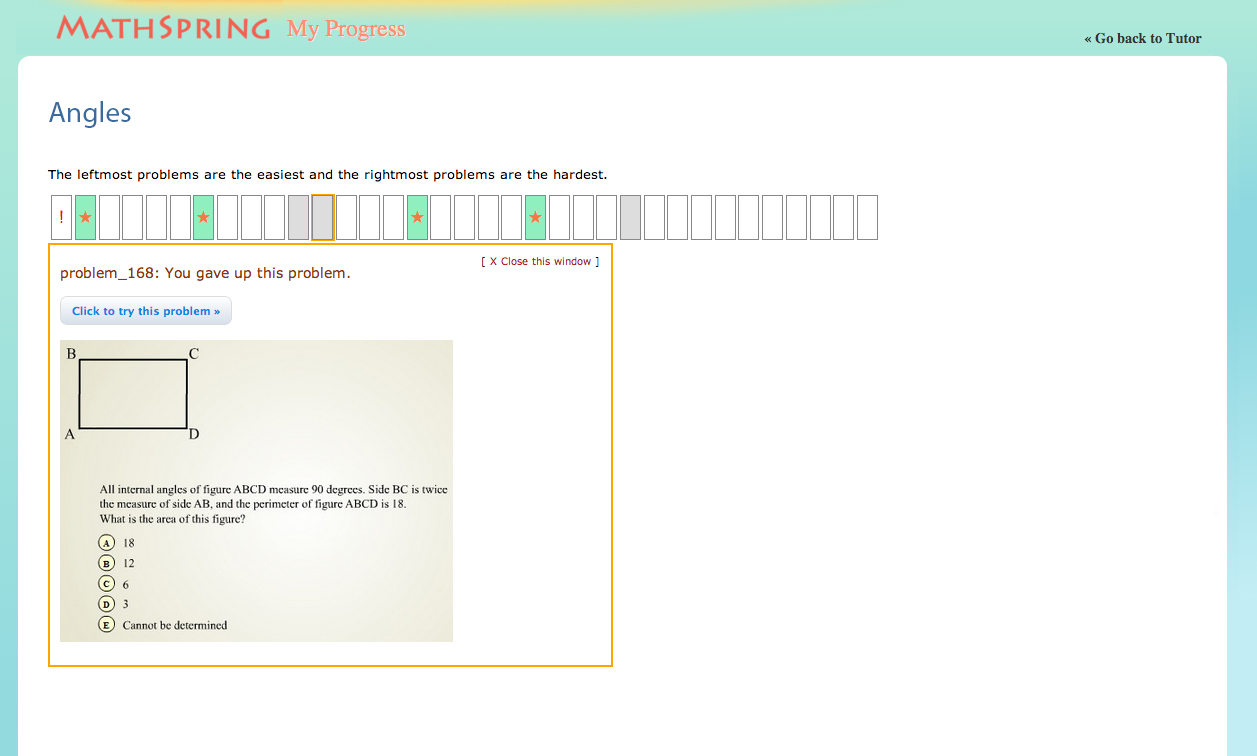

Topic Details: When a student selects a topic in the SPP, they go to a “Topic Details” page, which shows the details of the student's performance within the topic. Each individual problem is represented by a domino that is marked according to the student's performance (for example: a ‘star’ to represent a problem correctly solved, an ‘H’ for problems solved with hints, and an exclamation mark (‘!’) to represent disengaged behaviors).
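The domino marking can be illustrated with a small Python sketch. The `ProblemRecord` fields, the `domino_mark` function, and the order in which the rules are checked (disengagement first, then hints, then a clean correct answer) are assumptions made for this example; the description above only lists the three example marks, not how Mathspring computes them.

```python
from dataclasses import dataclass


@dataclass
class ProblemRecord:
    """Hypothetical summary of one problem attempt (field names are assumed)."""
    solved_correctly: bool
    hints_used: int
    disengaged: bool


def domino_mark(record):
    """Return the mark shown on the domino for one problem."""
    # Assumed precedence: disengagement is flagged before anything else.
    if record.disengaged:
        return "!"
    # Solved, but only with the help of hints.
    if record.solved_correctly and record.hints_used > 0:
        return "H"
    # Solved correctly without hints.
    if record.solved_correctly:
        return "star"
    # Other cases are not covered by the examples in the text; leave unmarked.
    return ""


marks = [
    domino_mark(ProblemRecord(True, 0, False)),
    domino_mark(ProblemRecord(True, 2, False)),
    domino_mark(ProblemRecord(False, 0, True)),
]
```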

Try Mathspring Tutor

Mosaic

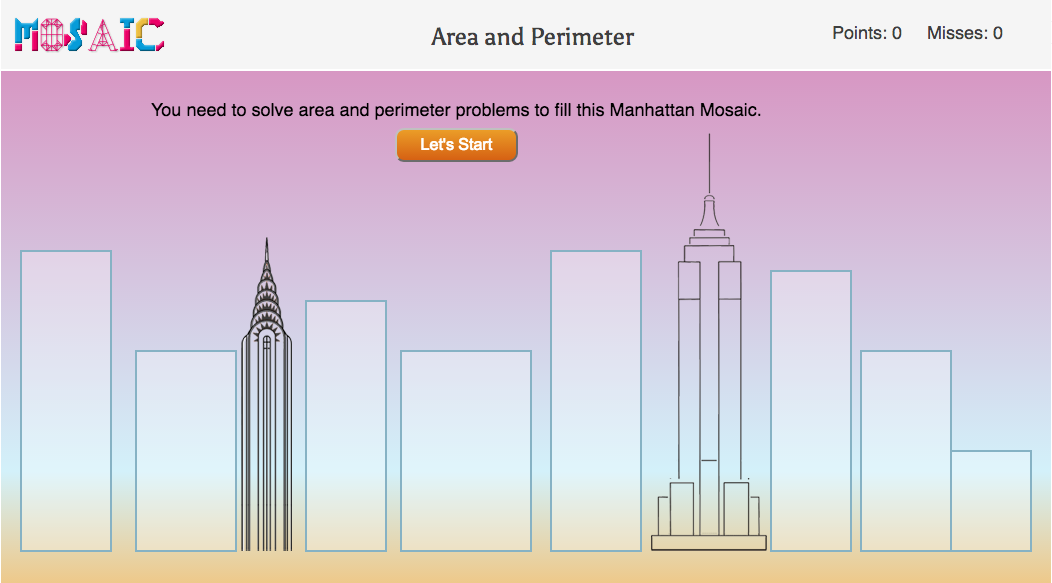

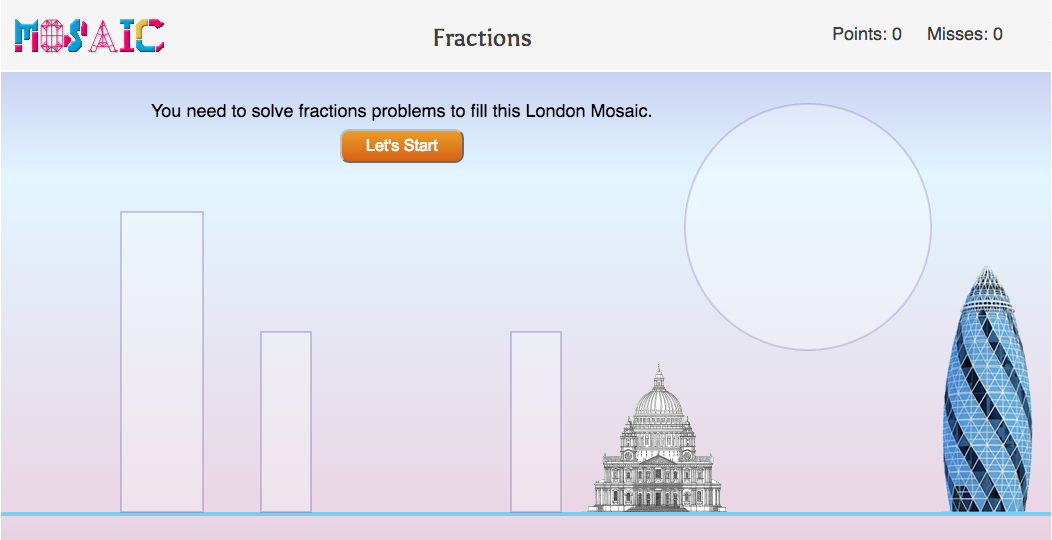

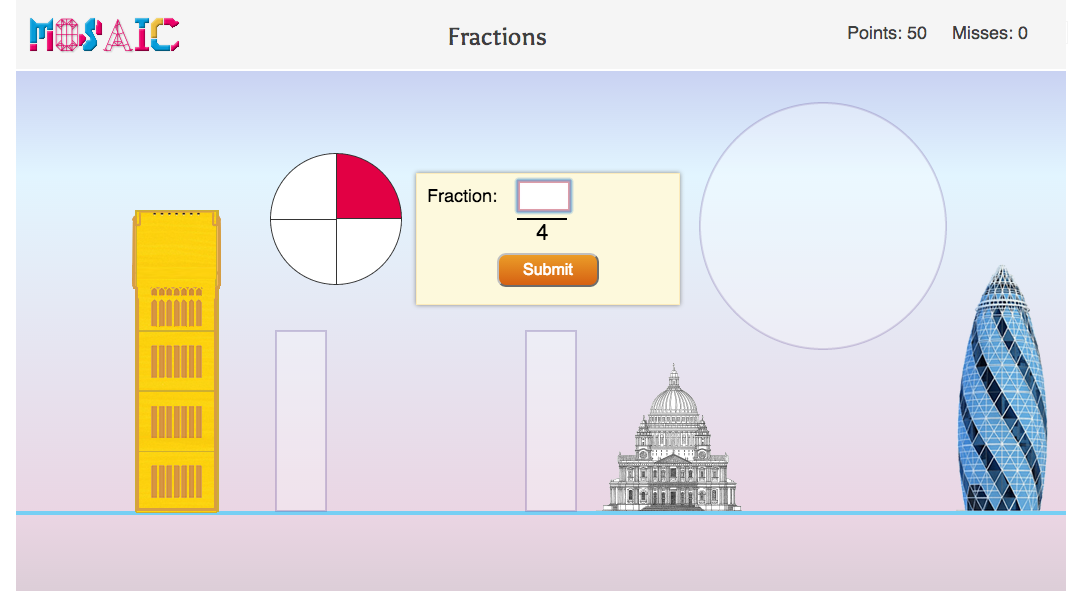

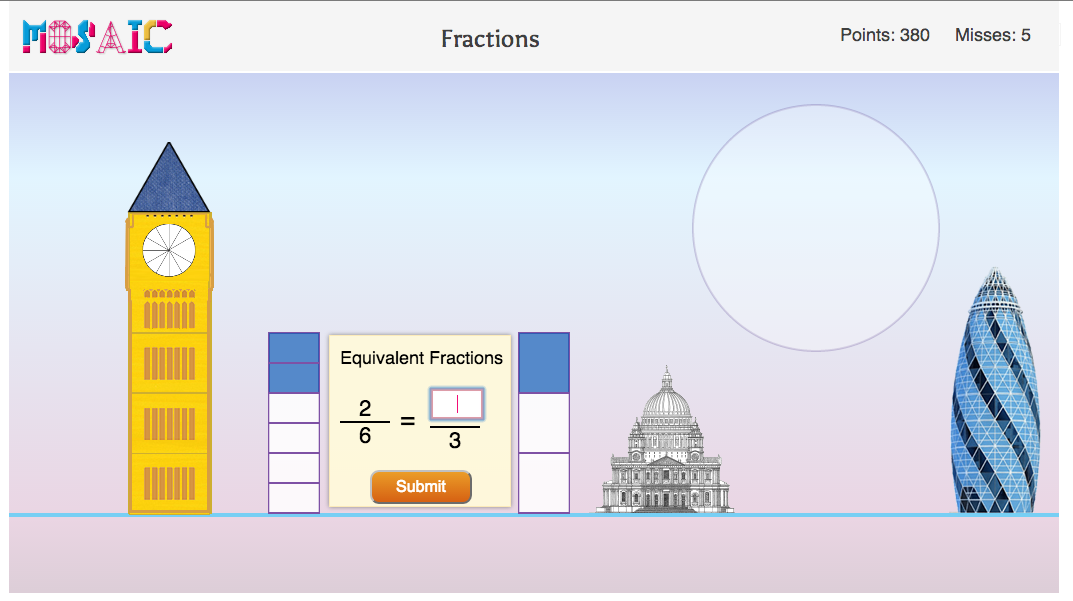

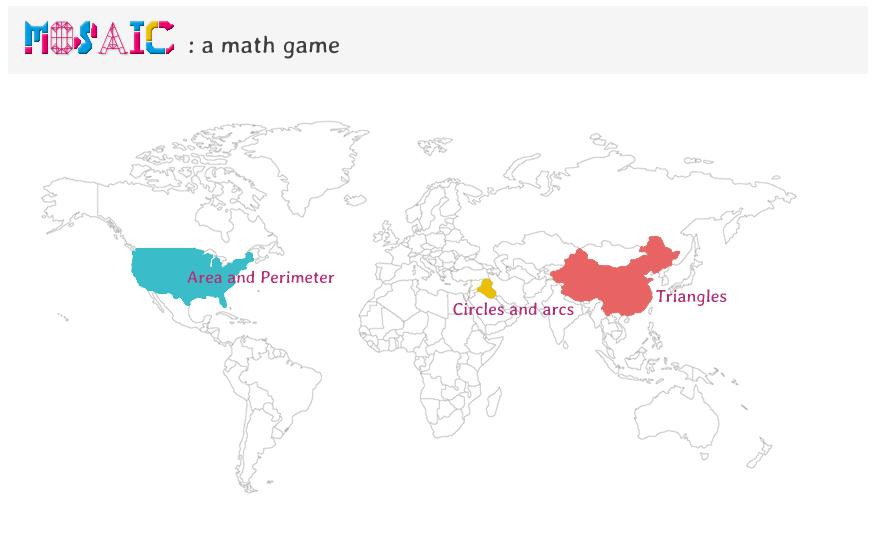

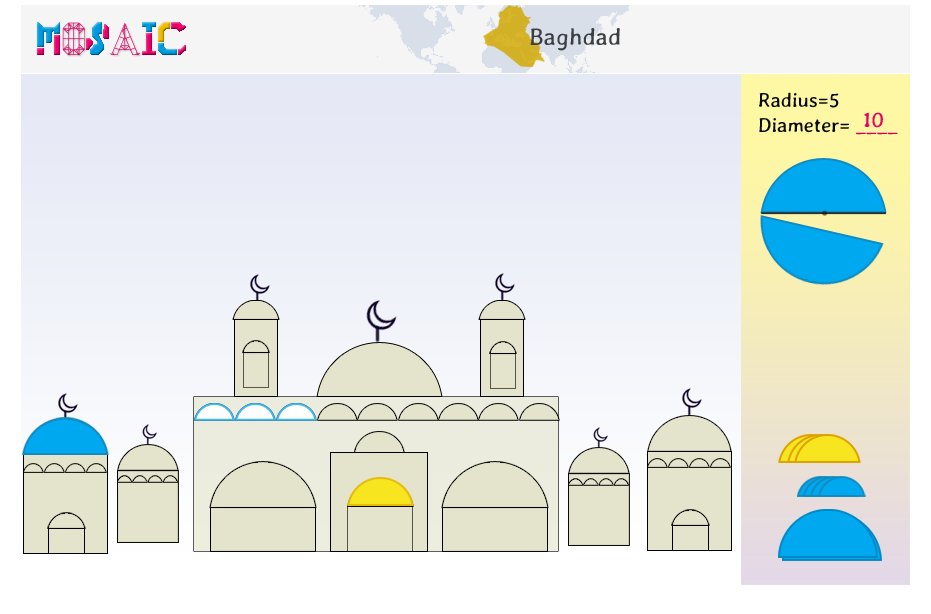

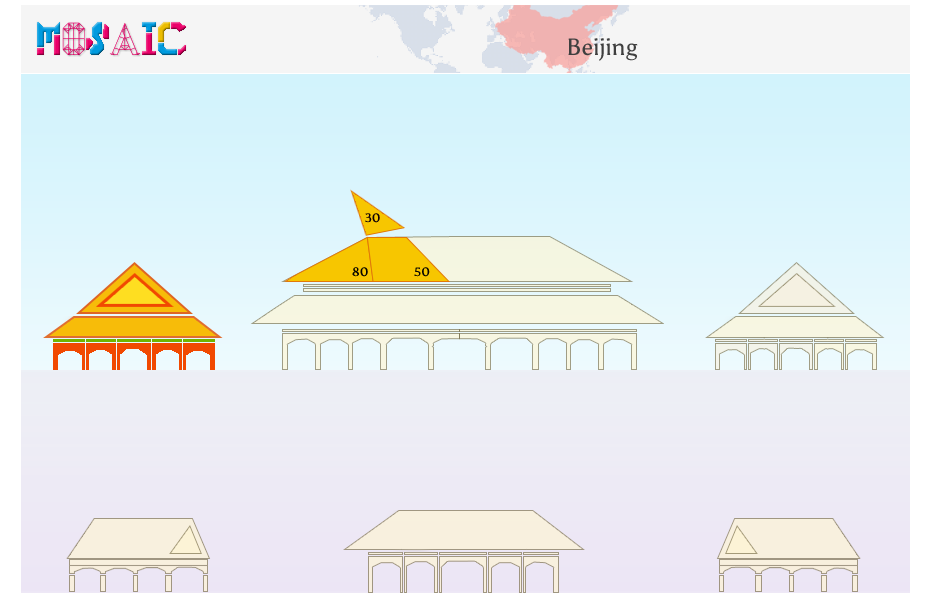

Mosaic comprises a series of mini math games that pop up within the Mathspring tutor to enhance students' affective states. In Mosaic mini-games, players solve math problems to generate tiles inside a mosaic. We have created mosaics of different cities. Each city is associated with a math skill. For example, New York is associated with ‘calculating area and perimeter’ and London with ‘fractions’.

Try London: Fractions

Try Manhattan: Area and Perimeter

Causal Modeling

It is not sufficient to know whether a particular intervention produces the expected outcomes; we would also like to understand why and how. Why do certain students, but not others, benefit from our interventions? If games generate learning gains, is it because they are better cognitive tools, or are they effective because students spend more time on task due to increased engagement?

I used a causal modeling framework to integrate and analyze student data collected from surveys, logs, and tests to understand the interrelationships between different student and tutor variables.

Findings suggest that students’ prior (incoming) attitudes, preferences, and personality traits are a major influence on how they interact with the tutoring systems and the interventions themselves, as well as on how they perceive the interventions. Students’ affect, engagement behaviors, and performance are highly related, creating a chain of cascading effects which suggests that students who appreciate mathematics (and their own math ability) more tend to feel more positive, engage more, and make the most of the software. In general, the causal models allowed me to see all of the following: a) associations that validated my prior assumptions (students’ attitudes and preferences prior to the intervention affect how they interact with and perceive the intervention); b) associations that provided new insights (among the four affective variables, confidence and frustration are more tightly linked with performance and ability, whereas interest and excitement are more related to attitude and appreciation for math and the tutor); and c) associations that made me think about possible confounds (female students solve more problems, but they neither have higher prior knowledge nor report higher self-discipline. Are they under-reporting their self-discipline, or is solving more problems not a reflection of higher self-discipline?). Even when the causal models did not support confirmatory causal claims, they provided intuitions about the learning process and guided us toward new explorations (for example, student variables can be divided into performance-oriented and enjoyment-oriented clusters).